Can LLMs Learn to Map the World from Local Descriptions?

LLMs learning spatial cognition and navigation from local descriptions in urban environments.


Abstract

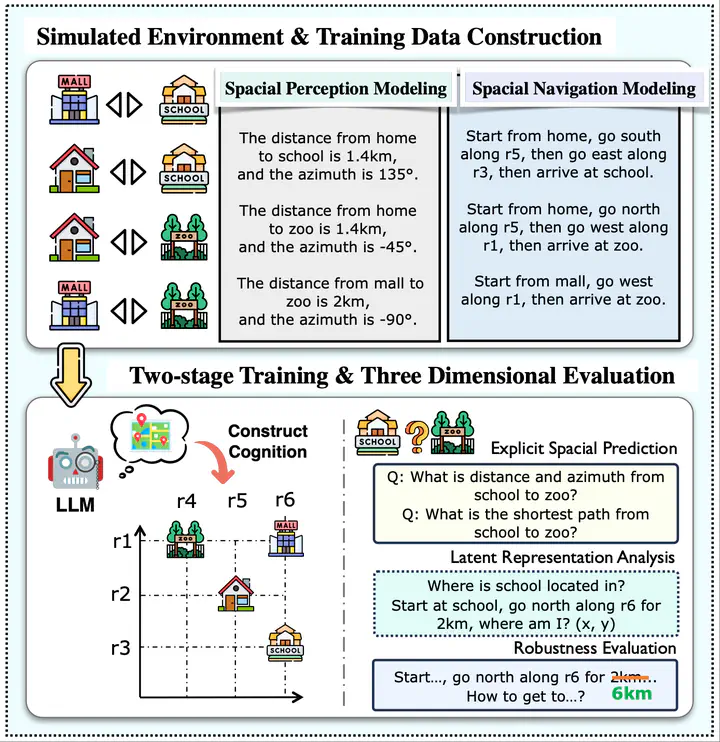

This study investigates whether large language models (LLMs) can develop spatial cognition by integrating fragmented relational descriptions drawn from local human observations. The research focuses on two core aspects: spatial perception (inferring global layouts from local positional relationships) and spatial navigation (learning road connectivity from trajectory data to plan optimal paths). Experiments in simulated urban environments show that LLMs can generalize to unseen spatial relationships between points of interest and that their latent representations align with real-world spatial distributions. The models also learn road connectivity from trajectory descriptions, enabling accurate path planning and dynamic spatial awareness during navigation.
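To make the navigation task concrete, the sketch below shows the classical, non-LLM version of "learning road connectivity from trajectories": consecutive stops in each trajectory are treated as connected road segments, and a path is then planned across the merged graph. It is an illustration only, not the method studied here. It assumes that trajectories are ordered lists of place names, that roads are undirected, and that "optimal" means the fewest segments (breadth-first search rather than a distance-weighted search). The functions `build_road_graph` and `shortest_path` and the place names are hypothetical.

```python
from collections import deque

def build_road_graph(trajectories):
    """Hypothetical helper: treat consecutive stops in each trajectory as an undirected road segment."""
    graph = {}
    for traj in trajectories:
        for a, b in zip(traj, traj[1:]):
            graph.setdefault(a, set()).add(b)
            graph.setdefault(b, set()).add(a)
    return graph

def shortest_path(graph, start, goal):
    """Breadth-first search: the route with the fewest segments, or None if unreachable."""
    if start not in graph or goal not in graph:
        return None
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk parent links back to the start, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in sorted(graph[node]):  # sorted for deterministic tie-breaking
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None

# Hypothetical trajectory data: no single trajectory goes from Library to Cafe.
trajectories = [
    ["Library", "Park", "Station"],
    ["Museum", "Park", "Cafe"],
    ["Station", "Cafe"],
]
graph = build_road_graph(trajectories)
route = shortest_path(graph, "Library", "Cafe")
print(route)  # ['Library', 'Park', 'Cafe']
```

The planned route joins segments observed in different trajectories. This is the kind of composition that a model must perform implicitly when it plans a path it has never seen described end to end.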