SelfGoal: Your Language Agents Already Know How to Achieve High-level Goals

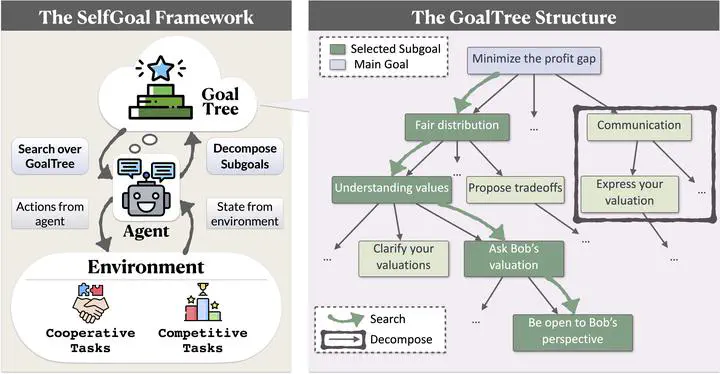

An overview of SelfGoal, illustrated with a bargaining example. The agent interacts with the environment and takes actions based on environmental feedback, while the GoalTree is dynamically constructed, utilized, and updated by the Search and Decompose Modules.

Abstract

Language agents powered by large language models (LLMs) are increasingly valuable as decision-making tools in domains such as gaming and programming. However, these agents often struggle to achieve high-level goals without detailed instructions and to adapt to environments where feedback is delayed. In this paper, we present SelfGoal, a novel automatic approach designed to enhance agents’ ability to achieve high-level goals with limited human priors and environmental feedback. The core idea of SelfGoal is to adaptively break down a high-level goal into a tree of more practical subgoals during interaction with the environment, while identifying the most useful subgoals and progressively updating this structure. Experimental results demonstrate that SelfGoal significantly enhances the performance of language agents across various tasks, including competitive, cooperative, and deferred-feedback environments.
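The abstract describes the SelfGoal loop only at a high level. The Python sketch below is a minimal illustration under stated assumptions, not the paper's implementation. It assumes a GoalTree whose leaves are the current, most concrete subgoals; a Search step that ranks those leaves against the latest observation; and a Decompose step that expands the chosen leaves into new, non-duplicate children. It also assumes the ranking and splitting judgments would come from the language model, and stands them in with plain callables. Every name here (`GoalNode`, `search`, `decompose`, `step`, `toy_score`, `toy_split`) is hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Iterator


@dataclass
class GoalNode:
    """One (sub)goal in the tree; the root holds the high-level goal."""
    text: str
    children: list[GoalNode] = field(default_factory=list)

    def walk(self) -> Iterator[GoalNode]:
        # Depth-first traversal over this node and all its descendants.
        yield self
        for child in self.children:
            yield from child.walk()

    def leaves(self) -> list[GoalNode]:
        # Leaves are the most concrete subgoals currently in the tree.
        return [n for n in self.walk() if not n.children]


# Hypothetical stand-ins for LLM prompts (assumption, not SelfGoal's API).
SplitFn = Callable[[str, str], list[str]]  # (goal, observation) -> subgoal texts
ScoreFn = Callable[[str, str], float]      # (subgoal, observation) -> usefulness


def search(root: GoalNode, observation: str, score: ScoreFn, k: int) -> list[GoalNode]:
    """Search step (sketch): pick the k leaves scored most useful right now."""
    ranked = sorted(root.leaves(), key=lambda n: score(n.text, observation), reverse=True)
    return ranked[:k]


def decompose(root: GoalNode, chosen: list[GoalNode], observation: str, split: SplitFn) -> None:
    """Decompose step (sketch): add new children, skipping text already in the tree."""
    seen = {n.text for n in root.walk()}
    for node in chosen:
        for text in split(node.text, observation):
            if text not in seen:
                node.children.append(GoalNode(text))
                seen.add(text)


def step(root: GoalNode, observation: str, score: ScoreFn, split: SplitFn, k: int = 2) -> list[str]:
    """One interaction step: return guidance for the agent, then refine the tree."""
    chosen = search(root, observation, score, k)
    decompose(root, chosen, observation, split)
    return [n.text for n in chosen]


def toy_split(goal: str, observation: str) -> list[str]:
    # Hypothetical rule-based splitter for a bargaining seller.
    if goal.startswith("Maximize"):
        return ["Anchor with a high opening price", "Concede slowly"]
    return []


def toy_score(subgoal: str, observation: str) -> float:
    # Hypothetical relevance: number of words shared with the observation.
    return float(len(set(subgoal.lower().split()) & set(observation.lower().split())))


root = GoalNode("Maximize profit in the bargaining game")
obs = "buyer asks for an opening price"
print(step(root, obs, toy_score, toy_split, k=1))  # -> ['Maximize profit in the bargaining game']
print(step(root, obs, toy_score, toy_split, k=1))  # -> ['Anchor with a high opening price']
```

Ranking only leaves means the guidance passed to the agent grows more concrete as the tree deepens, and the `seen` set keeps repeated decompositions from bloating the tree. How SelfGoal actually scores subgoals, how many it selects, and how it filters redundancy are details this section does not specify.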