Character is Destiny: Can Large Language Models Simulate Persona-Driven Decisions in Role-Playing?

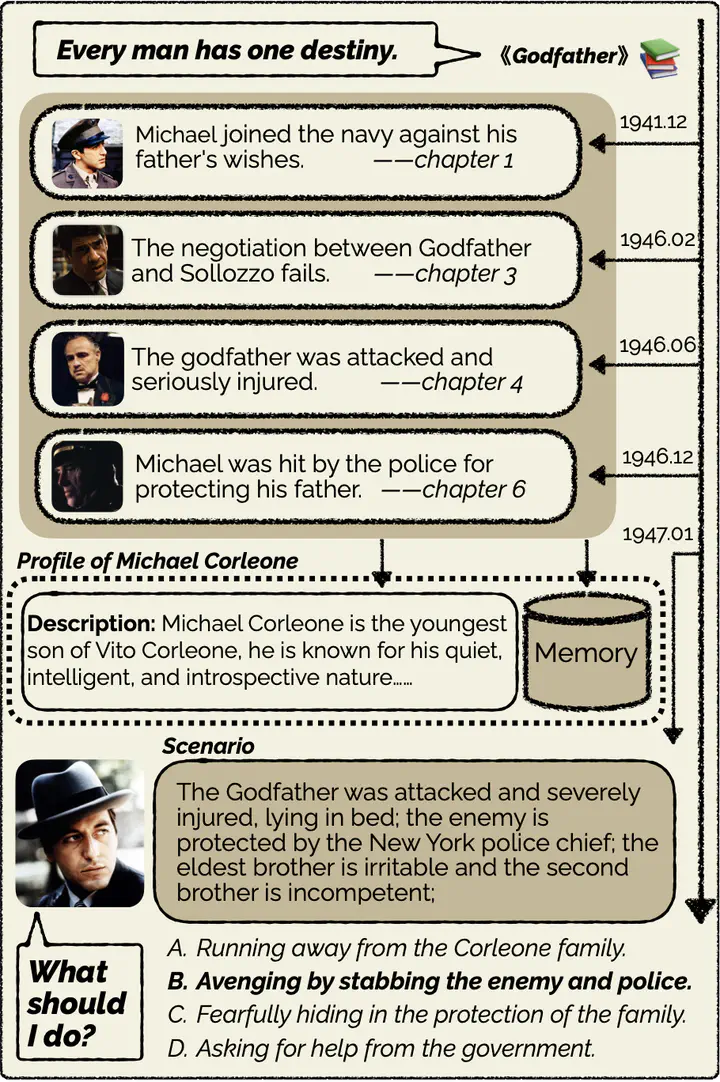

An example of the proposed task. LLMs generate a profile and memory from the historical data before the decision node in the storyline and then make life choices from the perspective of the character under the current scenario.

Abstract

Can Large Language Models (LLMs) substitute for humans in making important decisions? Recent research has revealed the potential of LLMs to role-play assigned personas, mimicking their knowledge and linguistic habits. However, imitating a persona's decisions requires a more nuanced understanding of that persona. In this paper, we benchmark the ability of LLMs to make persona-driven decisions. Specifically, we investigate whether LLMs can predict characters’ decisions given the preceding stories in high-quality novels. Leveraging character analyses written by literary experts, we construct a dataset, LIFECHOICE, comprising 1,401 character decision points from 395 books. We then conduct comprehensive experiments on LIFECHOICE with various LLMs and LLM role-playing methods. The results demonstrate that state-of-the-art LLMs exhibit promising capabilities in this task, yet substantial room for improvement remains. Hence, we further propose the CHARMAP method, which achieves a 6.01% increase in accuracy via persona-based memory retrieval. We will make our datasets and code publicly available.
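
As a rough illustration of how accuracy on a benchmark of this kind might be computed, the sketch below assumes that each decision point is posed as a multiple-choice question with a single correct option. The abstract does not specify the data format, so the `DecisionPoint` fields, the `accuracy` helper, and the `first_option_baseline` predictor are all hypothetical names introduced here; this is not the LIFECHOICE or CHARMAP implementation.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class DecisionPoint:
    """One character decision. All field names are hypothetical."""
    context: str             # story text preceding the decision node
    scenario: str            # situation the character currently faces
    options: Sequence[str]   # candidate choices (assumed multiple-choice)
    answer: int              # index of the choice the character actually made


def accuracy(points: Sequence[DecisionPoint],
             predict: Callable[[DecisionPoint], int]) -> float:
    """Return the fraction of points where the predicted index equals the answer."""
    if not points:
        raise ValueError("need at least one decision point")
    correct = sum(1 for p in points if predict(p) == p.answer)
    return correct / len(points)


def first_option_baseline(point: DecisionPoint) -> int:
    """Stand-in for an LLM call: always selects the first option."""
    return 0
```

In practice, `predict` would wrap a role-playing LLM prompted with the character's profile and memory; the trivial baseline is included only so that the sketch runs without a model.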