KORGym: A dynamic game platform offering over fifty games for comprehensive LLM reasoning evaluation

Abstract

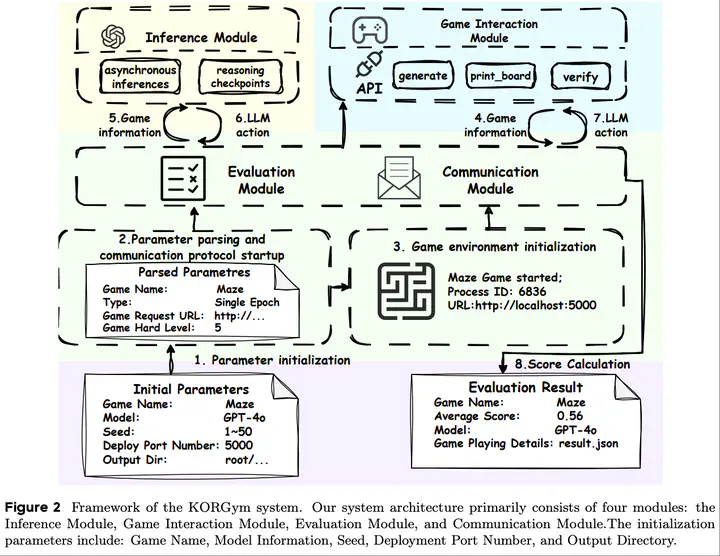

Existing benchmarks are often domain-specific and therefore cannot fully capture an LLM’s general reasoning ability. KORGym offers over fifty games in textual or visual formats and supports interactive, multi-turn evaluation, including reinforcement learning scenarios. Experiments on 19 LLMs and 8 VLMs reveal consistent reasoning patterns within model families and show that closed-source models perform better. The study also examines how modality, reasoning strategies, reinforcement learning techniques, and response length affect model performance.

Publication

In The Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025), Spotlight