AnalogyKB: Unlocking Analogical Reasoning of Language Models with A Million-scale Knowledge Base

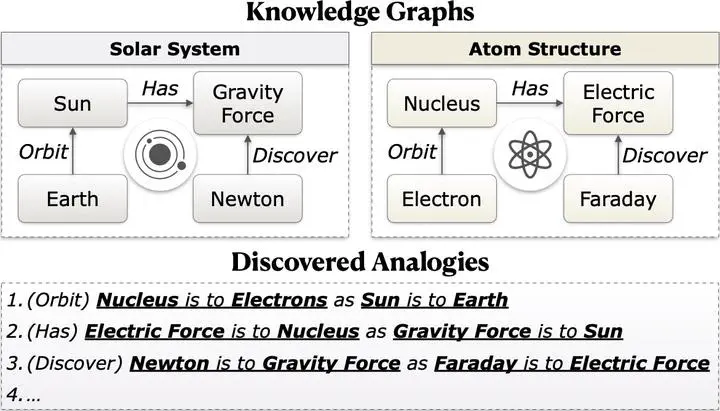

An example of acquiring analogies from knowledge graphs.

Abstract

Analogical reasoning is a fundamental cognitive ability of humans. However, current language models (LMs) still struggle to achieve human-like performance on analogical reasoning tasks, largely because of a lack of resources for model training. In this work, we address this gap by proposing AnalogyKB, a million-scale analogy knowledge base (KB) derived from existing knowledge graphs (KGs). AnalogyKB identifies two types of analogies from the KGs: 1) analogies of the same relation, which can be extracted directly from the KGs, and 2) analogies of analogous relations, which are identified with a selection and filtering pipeline enabled by large LMs (InstructGPT), followed by minor human effort for data quality control. Evaluations on a series of datasets spanning two analogical reasoning tasks (analogy recognition and analogy generation) demonstrate that AnalogyKB enables LMs to achieve substantially better results than previous state-of-the-art methods.
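To illustrate the first type (analogies of the same relation), the sketch below groups KG triples by relation and pairs up entity pairs that share a relation, yielding analogies of the form A:B :: C:D. This is a minimal sketch under stated assumptions, not the AnalogyKB extraction code: the function name `same_relation_analogies`, the toy triples, and the "every two distinct pairs with the same relation form an analogy" rule are all hypothetical. The second type (analogous relations), which relies on InstructGPT-based selection and filtering, is not modeled here.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical triple format: (head entity, relation, tail entity).
Triple = tuple[str, str, str]


def same_relation_analogies(triples: list[Triple]) -> list[tuple[str, str, str, str, str]]:
    """Return analogies (a, b, c, d, relation) meaning a:b :: c:d.

    Assumption for illustration: any two distinct (head, tail) pairs that
    share the same relation are treated as an analogy.
    """
    # Group (head, tail) pairs by their relation.
    by_relation: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for head, relation, tail in triples:
        by_relation[relation].append((head, tail))

    analogies = []
    for relation, pairs in by_relation.items():
        # Drop duplicate pairs while keeping their first-seen order.
        unique_pairs = list(dict.fromkeys(pairs))
        # Every unordered combination of two pairs gives one analogy.
        for (a, b), (c, d) in combinations(unique_pairs, 2):
            analogies.append((a, b, c, d, relation))
    return analogies


if __name__ == "__main__":
    toy_kg = [
        ("Paris", "capital_of", "France"),
        ("Tokyo", "capital_of", "Japan"),
        ("Berlin", "capital_of", "Germany"),
        ("Einstein", "field", "physics"),
    ]
    for a, b, c, d, rel in same_relation_analogies(toy_kg):
        print(f"{a}:{b} :: {c}:{d}  ({rel})")
```

Because every pair of same-relation triples yields a candidate, the count grows quadratically with the number of triples per relation, so a real pipeline would likely need sampling or filtering; how AnalogyKB handles this is not covered by this sketch.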